AI at Your Fingertips: Jarvis Has Arrived – Kyobo Securities

This report is an English translation of a report by Kyobo Securities in Korea.

Summary

Accelerating Development and the Democratization of AI Model Development

With improving inference performance, lower-cost and lighter-weight models, and an accelerating pace of performance gains, the adoption and commercialization of AI agents are expected to occur swiftly. Crucially, the entire industry is learning from and mutually accelerating the innovations achieved by each model. As the burden of adopting AI eases for companies of every size, from large enterprises to startups, the pace of developing AI tools and integrating AI into services is quickening. Considering the intensifying global competition and the robust cash flow of major global tech companies, capital expenditures related to AI are highly likely to increase.

Era of Edge AI Computing and Continuous Expansion of Semiconductor Demand

The Edge AI computing market is projected to grow, led by smartphones, automobiles, and PCs, with users’ AI experiences expanding through a wider array of devices. Smartphones are expected to take the lead initially, followed by personal computers capable of processing even more powerful AI models. In the automotive sector, as autonomous driving functions expand, AI capabilities across various devices are anticipated to continuously grow.

Predicted Changes in Memory Technology through AI Democratization

Memory technology is expected to change as AI is democratized. ASICs, which are designed for specific services with simplified circuitry and low power consumption, deliver optimal performance at low production cost, and this is driving demand from global tech giants. SOCAMM, a next-generation memory module proposed by NVIDIA for the high-performance computing and AI markets, is expected to spur new growth as a “second HBM” as the edge computing market expands. Mobile DRAMs such as LPW/LLW, which specialize in speed and efficiency, are expected to enable high-performance on-device AI.

Rising Semiconductor Demand in the AI Agent Era

In the era of AI agents, semiconductor demand is bound to increase continuously. Strong demand from North American CSPs and China is maintaining a robust global AI infrastructure investment trend. Additionally, the expansion of edge AI devices (including on-device applications) is expected to boost the adoption of high-speed, high-capacity memory. Memory prices are projected to strengthen by 2Q25, and proactive inventory buildup by customers is expected to signal an industry recovery.

Prerequisite for ‘Conversations between AIs’: Advanced AI Integration within Legacy Software

In the era of AI agents, individual software companies must focus on enabling ‘conversations between AIs.’ For AI agents to interact effectively with various platforms and software, building AI-friendly metadata is essential, and advanced AI integration within legacy software is a critical task to prevent isolation. Consequently, both domestic and international platform and software companies are rapidly building AI into their services through flexible methods, either by leveraging their own models or by interfacing with major external AI models. They aim first to 1) raise the level of AI application within their services, and then to 2) secure accessibility through collaborations, such as opening APIs to major AI agents (e.g., ChatGPT, Gemini, Perplexity) that are expected to serve as ‘portals.’ In advertising, companies like Meta, and in commerce, companies like Shopify and Amazon, are swiftly integrating AI into their services. Among domestic companies, NAVER’s On-Service AI strategy is anticipated to advance rapidly and achieve profitability.

Key Chart

1. Democratization of the AI Era

1-1. My Own AI Agent

An AI agent is defined as a software program that autonomously performs tasks based on given objectives. In other words, it is an artificial intelligence system capable of understanding and assessing situations to determine appropriate actions without explicit user instructions. With recent advancements in large language models (LLMs) and similar technologies, the comprehension and generative abilities of AI agents have improved significantly. This evolution is transforming them from simple chatbots into agents capable of executing complex, multi-step tasks. Such a shift represents a transition from merely providing informational responses to taking concrete actions, showcasing a future in which AI agents handle tasks on behalf of users by leveraging a variety of tools.

The core concept of an AI agent is its ability to autonomously manage tasks for the user. For instance, OpenAI CEO Sam Altman has mentioned that in the future, users might possess an agent that acts like an “extended alter-ego,” automatically drafting email responses as needed. Such agents would operate like seasoned assistants on standby, reading and responding to emails, managing schedules, and gathering information independently under the user’s guidance. Similarly, on its March 11 earnings call, Oracle forecast that strengthening the role of AI agents and enhancing AI functionality within its SaaS offerings (ERP, HCM, SCM, and others) could deliver cost reductions and efficiency improvements in the healthcare and insurance sectors, accelerating future revenue growth on the back of robust AI and cloud infrastructure expansion. In this way, AI agents are evolving beyond merely answering queries to acting as proxies that remember context and execute continuous tasks.

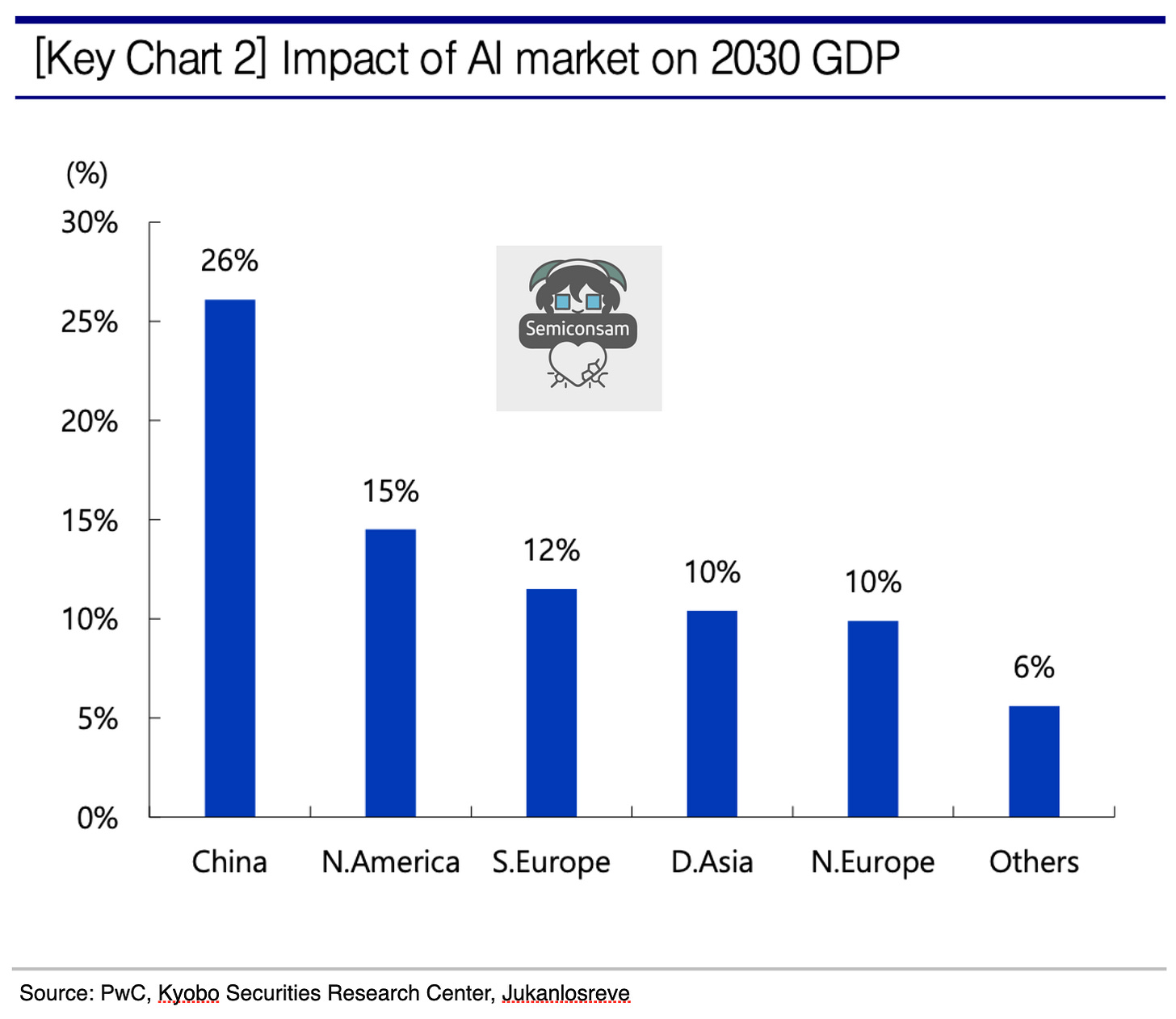

The global AI agent market is projected to grow from approximately US $5.3 billion in 2024 to US $47 billion by 2030, with an impressive compound annual growth rate (CAGR) of 43.9%. In particular, as advancements in natural language processing allow AI agents to better understand context and handle complex queries, their adoption is accelerating across various sectors such as customer service, healthcare, and finance. The productivity gains and shifts in consumption structures resulting from AI adoption are expected to have significant impacts on national GDP growth, corporate cost reductions, and increased profitability. According to industry data, by 2030 the GDP contributions attributable to AI are forecasted to be 26% for China, 15% for North America, 12% for Southern Europe, and 10% for Asia. China, with its high manufacturing share of GDP and proactive capital reinvestment, is anticipated to differentiate its medium- to long-term growth through a virtuous cycle of technology investment, enhanced productivity, reinvestment, and subsequent growth. In South Korea, data from the Ministry of Science and ICT indicates that if AI is successfully integrated across the economy by 2026, cost savings of 187 trillion KRW and revenue increases of 123 trillion KRW could be achieved. Notably, a survey among large domestic companies shows that 88% are either actively adopting or considering AI adoption, suggesting that the societal structural transformation driven by AI will accelerate. Moreover, a global survey found that the proportion of organizations implementing AI in at least one business area rose from 50% in 2020 to 72% in 2024, with over 90% of respondents having used generative AI at least once. Particularly in departments such as human resources, legal, and IT, the cost-saving and revenue-enhancing benefits of AI are becoming increasingly tangible, indicating that both the scope and depth of AI utilization will continue to expand in the future.
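The growth rate quoted above can be sanity-checked with the standard compound annual growth rate formula. The sketch below is illustrative only: the function name is hypothetical, and it assumes six compounding periods between the 2024 and 2030 figures.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: US $5.3 billion (2024) to US $47 billion (2030).
# Assumption: six compounding periods between the two figures.
agent_market_cagr = cagr(5.3, 47.0, 2030 - 2024)
print(f"Implied CAGR: {agent_market_cagr:.1%}")
```

Under that assumption the implied rate comes out close to the 43.9% cited, which suggests the two endpoints and the growth rate are internally consistent.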

The Technological Foundation of AI Agents: The Trinity of Models, Algorithms, and Hardware

The technological foundation of AI agents comprises three key elements. First, the AI model acts as the brain. Typically, large-scale deep learning models (e.g., GPT-series language models, computer vision models, etc.) are responsible for the agent’s perception and decision-making, and continuous advancements are enhancing their ability to reason and learn in a human-like manner.

Second, learning algorithms are crucial. In addition to supervised and unsupervised learning, reinforcement learning enables agents to interact with their environment and learn optimal behavioral strategies. For example, autonomous driving agents refine their decision-making in real-world driving scenarios through reinforcement learning. Moreover, agents that improve their performance after deployment through federated learning or online learning techniques are also under active research.

Third, robust hardware support is essential for the smooth operation of these AI models. Accelerators like GPUs and TPUs in cloud data centers make it possible to train and run large models, while NPUs (neural processing units) in smartphones and AI chips in IoT devices support real-time, on-device computation for AI agents. For instance, the latest smartphones come equipped with dedicated AI processors that execute tasks such as image recognition and voice assistance directly on the device.

On the strategic and environmental front, establishing an ecosystem through API integrations with other applications and models is indispensable. Even if AI agents are rapidly deployed on-device and exhibit high inference capabilities, they will lack competitiveness unless an ecosystem is built that effectively addresses users’ needs. The development of an ecosystem—through enhanced interoperability with applications for shopping, reservations, content, and more—will be a critical prerequisite for the formation of truly effective AI agents. It is anticipated that only software or platforms that remain accessible will survive if on-device AI agents come to dominate user interactions.

1-2. On-Device AI + On-Service AI = AI Agent

Big tech companies are combining on-device AI with cloud AI in a hybrid approach that overcomes the limitations of individual devices and ultimately envisions market expansion through the convergence of these distinct areas.

AI agents can be classified based on their operating environment into two categories: on-device execution (On-Device AI) and cloud-based execution (On-Service AI).

• On-Device AI

On-Device AI executes models directly on devices such as smartphones or PCs, delivering rapid responses without network latency and offering the advantage of protecting personal data from external exposure. However, due to limited hardware resources, there are constraints when running very large models or computationally intensive tasks, and maintaining a consistent user experience across different devices can be challenging.

• Cloud AI Agents

Cloud AI agents leverage the abundant resources available on servers, making it feasible to implement high-performance, large-scale models and apply updates frequently. Nonetheless, they require a constant internet connection and are subject to latency and privacy concerns.

On-device AI contributes to boosting device sales, while cloud-based services drive cloud revenue, with big tech aiming to secure competitive advantages in both areas. Although building an integrated ecosystem of devices and services might seem like a competitive struggle, it is more rationally seen as a complementary strategy.

Areas that are not fully addressed by on-device AI—traditionally managed by numerous existing platforms and software—are likely to be taken over by software companies that have thrived in the new ecosystem. These companies are expected to be those that already generate continuous UGC content and e-commerce transactions through established traffic and community relationships. Among these, on-service AI providers that have advanced the integration of AI into their software are considered more likely to succeed. To harness AI effectively, it is essential to build AI-friendly metadata that allows the platform’s data to be leveraged by AI. Thus, to 1) enhance interoperability with AI agents and 2) ensure that the platform remains indispensable to AI agents, each platform and software is expected to prioritize the establishment of on-service AI capabilities.

Due to these strengths and weaknesses, there has been growing attention toward a hybrid agent architecture that combines the speed and privacy of on-device processing with the performance and scalability of cloud solutions. For example, Apple is integrating a compact on-device language model (approximately 3 billion parameters) with a large server-side language model, employing a system where personalized tasks are handled locally and more complex requests are processed in the cloud. In the future, agents are expected to optimize the user experience through a collaborative effort between devices and the cloud.
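The division of labor in a hybrid architecture can be pictured as a small routing policy. The sketch below is a hypothetical illustration, not a description of any vendor's actual system: the `Request` fields, the complexity score, and the `local_limit` threshold are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Request:
    prompt: str
    uses_personal_data: bool  # e.g. touches messages, photos, or calendar
    est_complexity: float     # hypothetical difficulty score in [0, 1]


def route(req: Request, online: bool, local_limit: float = 0.4) -> str:
    """Return "device" or "cloud" for a request (illustrative policy only)."""
    # Offline: the on-device model is the only option.
    if not online:
        return "device"
    # Personal or simple tasks stay local for privacy and low latency.
    if req.uses_personal_data or req.est_complexity <= local_limit:
        return "device"
    # Everything else is sent to the larger server-side model.
    return "cloud"


print(route(Request("Summarize my unread messages", True, 0.3), online=True))
print(route(Request("Draft a detailed market analysis", False, 0.9), online=True))
```

A production router would also need to weigh battery, model availability, and user consent; the point here is only that the on-device and cloud paths complement rather than replace each other.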

Big tech companies’ strategy to expand AI-enabled devices harnesses both the complementary advantages and competitive aspects of on-device and on-service AI. Apple and Samsung emphasize device independence through on-device AI, while Microsoft and Amazon leverage their cloud strengths with on-service AI, converging these approaches into a hybrid model. However, intense competition is emerging over leadership in chips, cloud infrastructure, and ecosystem control. By 2025, with advancements in 5G/6G and AI chips, this strategy is likely to extend beyond the device market into smart homes, automobiles, and other sectors, potentially reshaping the entire industry.

1-3. The Accelerated Timeline for the Adoption/Commercialization of AI Agents

1) The miniaturization of AI, 2) accelerated performance improvements, and 3) rapid advancements in vertical AI functionalities

Competition to enhance AI models’ inference performance, reduce costs and model size, and achieve low latency is ongoing, and optimization efforts have led to a diversification of AI services across different sectors. In this context, as lightweight, low-cost AI agents with high inference capabilities are deployed on-device, the adoption and commercialization of AI agents are expected to accelerate rapidly.

The rapid development of leading frontier models has elevated the overall intelligence level—including inference capabilities—and initiated a virtuous cycle whereby the AI industry as a whole quickly adopts improved methods for optimizing both learning and inference. As illustrated in the chart, the time lag for other companies to catch up to the inference improvements made by frontrunners like OpenAI has significantly diminished, further accelerating performance enhancements. Additionally, the industry is swiftly learning techniques to reduce API call costs for models that achieve top-tier Intelligence Index ratings.

If an “AI agent” is defined as a system that solves problems through multi-stage inference and coordination with other applications or models, then not only have inference performance and cost issues been addressed, but the differentiation and rapid development of each vertical function have also been remarkable. Numerous examples already exist where high practical usability has been achieved in segmented areas—such as research/productivity, marketing, design, reservations, audio, video, and prompt generation—by optimally leveraging both in-house and external models.

In such an environment, the focus shifts beyond the competition in model performance—the “infrastructure”—to how effectively AI tools can be integrated into existing or new services to generate revenue. Big tech companies, which have already secured profit generation capabilities in advertising, commerce, and cloud services, are demonstrating a commitment to maintaining their investment pace. Guidance indicates that AI capital expenditures in 2025 are expected to be even larger than those in 2024. These companies are not only spearheading improvements in frontier model performance but are also vigorously working to integrate AI into their existing services to drive revenue growth.

1-4. Democratization of AI Model Development Following the Emergence of DeepSeek

Since DeepSeek’s debut, the perception that AI model development is the exclusive domain of a few large corporations has weakened, and advancements in AI technology are shifting toward service-oriented AI (i.e., On-Service AI), altering market dynamics. Whereas only a handful of companies with vast capital and specialized expertise once developed and deployed AI, a growing number of players now have easy access to these technologies.

In the case of DeepSeek, the approach focused on:

1. Allocating more resources to the inference process rather than the initial model training, and

2. Optimizing the utilization of resources during inference by employing a Mixture-of-Experts strategy.
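The resource saving behind a Mixture-of-Experts design comes from evaluating only a few experts per input. The following is a generic, minimal sketch of top-k gating and is not DeepSeek's implementation: the toy experts are plain functions, and the gate scores are passed in by hand, whereas a real model would compute them with a learned router.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_scores, experts, top_k=2):
    """Run only the top_k experts chosen by the gate and mix their outputs."""
    probs = softmax(gate_scores)
    # Indices of the top_k highest-probability experts.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    # Weighted sum over the selected experts; the others are never evaluated.
    mixed = sum(probs[i] / norm * experts[i](x) for i in chosen)
    return mixed, chosen


# Four toy "experts" (hypothetical); only two run for any given input.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
output, used = moe_forward(3.0, gate_scores=[0.1, 2.0, 1.5, -1.0], experts=experts)
print(used, output)
```

With four experts and `top_k=2`, half of the expert computation is skipped for each input, which is the sense in which inference resources are used more efficiently.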

This paradigm shift is being embraced not only in China but across the global AI industry, including by leading US companies. Bloomberg estimates that training accounted for over 40% of hyperscalers’ AI budgets in 2024 and that this share will fall to 14% by 2032, while the share going to AI inference is projected to rise to 50%. The advantage of concentrating resources on inference, and of making inference both optimized and lightweight, is that the cost of running AI behaves more like a variable expense: a larger number of API users share the cost, rather than a few users bearing enormous fixed expenses.

Based on available data, the efforts of US and Chinese API providers to enhance inference efficiency—evidenced by declining per-token supply prices and improved processing speeds—are increasing the incentive for API-utilizing companies to adopt AI. Comparisons among major lightweight models with similar intelligence levels released in the US and China since DeepSeek reveal a trend of reduced API call costs and enhanced processing speeds.

A representative example is Alibaba’s QwQ-32B, released in March 2025. This inference-centric AI model differentiates itself from conventional supervised learning models; according to Artificial Analysis, its Intelligence Index is 58, similar to DeepSeek R1’s 60. However, its API call cost is $0.50 per million tokens, half of DeepSeek R1’s $1, and its processing speed is 86 tokens per second, more than three times that of DeepSeek R1 (26 tokens per second). Even before the emergence of DeepSeek R1, Google’s Gemini 2.0 Flash-Lite had already demonstrated outstanding low-latency, lightweight performance, with a cost of $0.20 per million tokens and a processing speed of 256 tokens per second. Furthermore, Google’s open-source model Gemma 3 (released in March 2025 with 27 billion parameters) exhibits language capabilities comparable to DeepSeek R1 in Elo score and inference performance (in areas such as MATH and GPQA Diamond) that is not significantly inferior to Gemini 2.0 Flash-Lite, despite using fewer parameters than DeepSeek R1, Llama 3.3 (70B), or Qwen 2.5 (72B).
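To make the price and speed gap concrete, the comparison can be worked through for a fixed workload. This is a rough sketch using only the QwQ-32B and DeepSeek R1 figures quoted above; the 10-million-token workload is hypothetical, and it assumes a single blended price per token and strictly sequential generation at the quoted speed.

```python
# Figures as quoted in the text: price in US$ per million tokens, speed in tokens/s.
models = {
    "QwQ-32B": {"usd_per_mtok": 0.50, "tokens_per_s": 86},
    "DeepSeek R1": {"usd_per_mtok": 1.00, "tokens_per_s": 26},
}


def job_cost_usd(usd_per_mtok: float, tokens: int) -> float:
    """API cost of processing `tokens` tokens at a flat per-million price."""
    return usd_per_mtok * tokens / 1_000_000


def job_hours(tokens_per_s: float, tokens: int) -> float:
    """Wall-clock hours to generate `tokens` tokens in a single stream."""
    return tokens / tokens_per_s / 3600


workload = 10_000_000  # hypothetical workload, in tokens
for name, m in models.items():
    cost = job_cost_usd(m["usd_per_mtok"], workload)
    hours = job_hours(m["tokens_per_s"], workload)
    print(f"{name}: ${cost:.2f}, {hours:.1f} h")

speed_ratio = models["QwQ-32B"]["tokens_per_s"] / models["DeepSeek R1"]["tokens_per_s"]
cost_ratio = models["QwQ-32B"]["usd_per_mtok"] / models["DeepSeek R1"]["usd_per_mtok"]
print(f"speed ratio: {speed_ratio:.2f}x, cost ratio: {cost_ratio:.2f}x")
```

Real API pricing usually distinguishes input from output tokens and requests run in parallel, so the absolute numbers are only indicative; the ratios are what matter for the argument.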

Due to these trends, 1) improvements in performance and cost driven by technological competition are accelerating as the entire market learns from one another, and 2) the easing of AI adoption burdens across companies—from large corporations to SMEs to startups—is speeding up the development of AI tools and the integration of AI into services by software providers.

This surge in investment not only boosts companies’ own capital expenditures but also acts as a driver for increased demand for cloud services among big tech firms in both the U.S. and China. As will be discussed later, U.S. big tech companies are improving their cash flows through growth in cloud revenues and profits driven by AI demand, along with expansion in their core businesses. This is likely to prompt them to further increase AI-related capital expenditures to counterbalance the pace of investment by Chinese companies.

In the case of Chinese firms, the competitive drive for AI investment is accelerating with robust government support. During the March 2025 sessions of the National People’s Congress and the CPPCC, a fund worth 1 trillion yuan (approximately 200 trillion won) was decided upon for investments in advanced technologies such as AI and quantum computing. Additionally, in January 2025, the state-owned Bank of China announced large-scale financial support for the development of the AI industry, with plans to provide special financial backing totaling 1 trillion yuan over the next five years.

1-5. Outlook for Cloud Monetization and AI Investment Amidst AI Market Growth

With the expansion of the AI market, free cash flow is improving, and in light of intensifying global competition driven by market growth, large-scale investments are expected to continue. For global big tech companies, (1) despite increased investments, cash flows have been balanced or even improved through growth in revenue and profits from their core businesses and cloud segments, and (2) considering the intensifying global competitive landscape—as reflected in each company’s guidance—it is highly likely that AI-related capital expenditures will increase.

Looking back at 2023–2024, (1) robust revenue and profit growth were observed among major global cloud service providers (CSPs) such as AWS, Azure, and Google Cloud, driven by rising cloud demand related to AI services, and (2) core businesses incorporating AI—ranging from advertising (Google, Meta) and commerce (Amazon) to B2B software (Microsoft) enhanced by advanced algorithms and the adoption of generative AI—also experienced growth. As a result, the free cash flow of these major big tech firms improved, even amidst increased capital expenditures on tangible assets.

In terms of cloud revenue growth, in Microsoft’s fiscal 2Q25 (FY25 Q2), Azure revenue grew 31% year over year, with AI services contributing 13 percentage points of that growth, and AI service-related revenue rose 157% YoY. AWS, the world’s largest CSP, recorded YoY revenue growth of 18.5% for 2024, with its operating profit margin (OPM) improving by 9.9 percentage points year over year. Google Cloud, which has had a lower global market share than the other two and posted a cloud segment loss in 2022, grew revenue by 25.9% and 30.6% YoY in 2023 and 2024, respectively, and its OPM improved rapidly from 5.2% in 2023 (the year it turned profitable) to 14.1% in 2024.
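Two kinds of change appear in these figures: revenue growth, which compounds from year to year, and margin improvement, which is a difference in percentage points. The short sketch below applies both to the Google Cloud numbers quoted above; the helper name is hypothetical.

```python
def compound_growth(*yoy_rates: float) -> float:
    """Cumulative growth from a sequence of year-over-year rates (as fractions)."""
    total = 1.0
    for rate in yoy_rates:
        total *= 1.0 + rate
    return total - 1.0


# Google Cloud revenue growth as quoted: +25.9% (2023) and +30.6% (2024).
google_cloud_two_year = compound_growth(0.259, 0.306)

# OPM moved from 5.2% to 14.1%; the change is measured in percentage points.
opm_change_pp = 14.1 - 5.2

print(f"Cumulative revenue growth, 2022 to 2024: {google_cloud_two_year:.1%}")
print(f"OPM change: {opm_change_pp:.1f} percentage points")
```

Because the two annual rates multiply rather than add, cumulative revenue growth over the two years is larger than the simple sum of 25.9% and 30.6%.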

There are already many examples in segmented areas, including research and productivity, marketing, design, reservations, audio, video, and prompt generation, where optimal use of both in-house and external models has secured high practical usability. Given the ongoing trends in enhancing learning and inference efficiency as well as model miniaturization, the vertical differentiation of these AI tools is expected to accelerate further.

Furthermore, beyond the development of these vertical tools, from 2025 onward, the expanded penetration of AI agents—enabled by the widespread adoption of on-device AI (facilitating multi-step inference and coordination with external applications/models)—coupled with increased cloud demand from individual platforms and software advancing their AI adoption to avoid accessibility disparities, is expected to sustain the growth momentum of the cloud market.

Meanwhile, major US and Chinese big tech companies and xAI (Elon Musk’s AI company) are set to increase their investments in AI-related areas such as servers, data centers, and networking in 2025 compared to 2024. Reductions in pre-training and inference costs have led to lower per-token fees for model users, which is anticipated to stimulate overall market demand and drive monetization. As monetization becomes more achievable, sustainable long-term investments will also be possible, intensifying the competitive race among companies to enhance performance and secure their ecosystems. In fact, Alphabet, Microsoft, Amazon, and Meta have all indicated guidance for substantial increases in AI-related capital expenditures in 2025 relative to 2024.

Chinese big tech companies (including Alibaba, Tencent, Baidu, and ByteDance) are also expected to expand their AI investments with robust government support. Notably, in February 2025, Alibaba announced plans to invest 380 billion yuan (approximately 75 trillion KRW) over three years in cloud and AI sectors, and ByteDance is reported to have allocated over 12 billion dollars (approximately 17 trillion KRW) for AI-related capital investments in 2025.

2. The Era of Edge AI Computing

2-1. Hardware with Increasing AI Penetration: Enhancing User AI Experience Through Diverse Devices

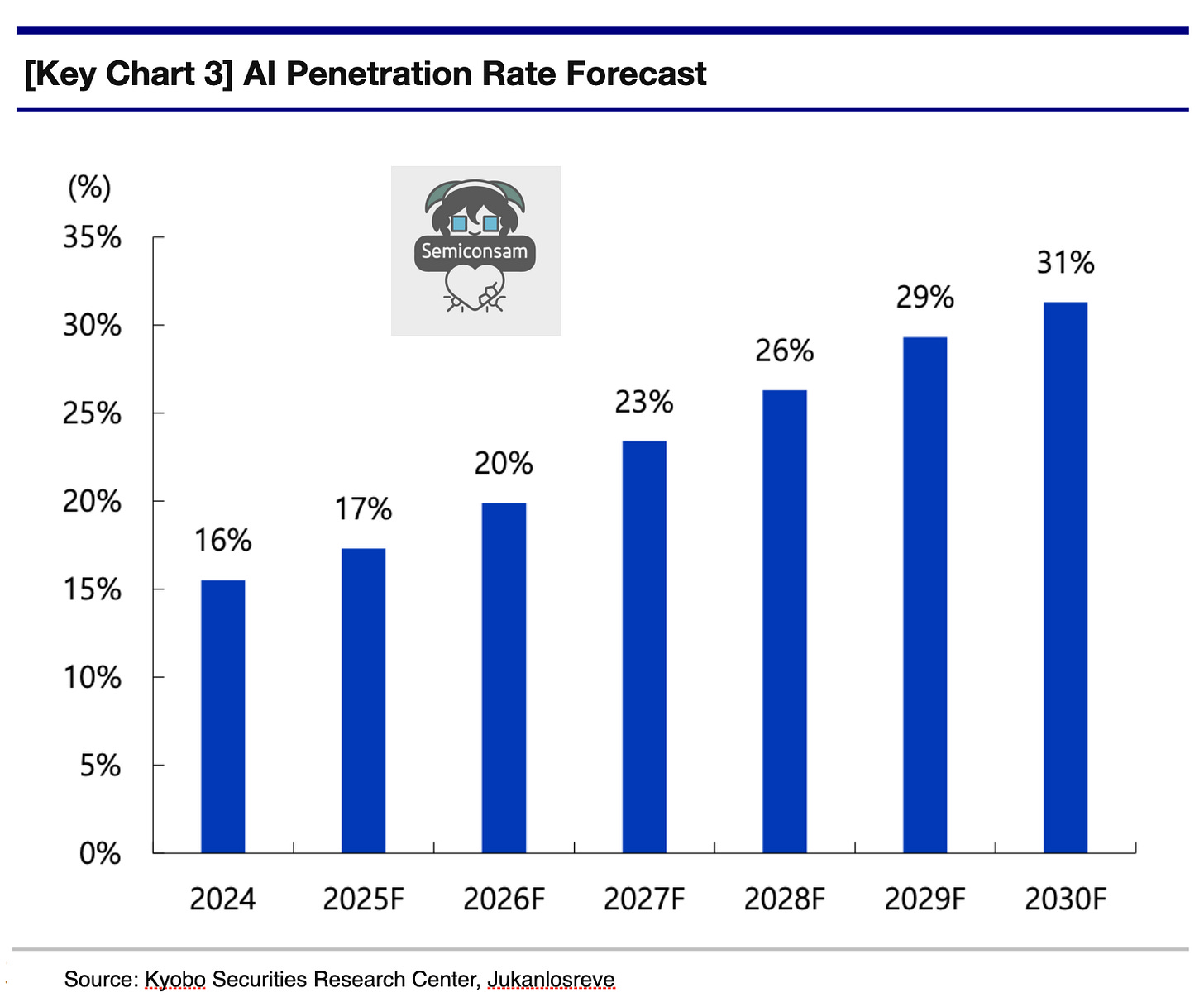

The Edge AI computing market is forecasted to grow at a CAGR of 28.8% through 2030, driven by smartphones, automobiles, and PCs, which will enhance the user AI experience through an expanding array of devices.

1. Smartphones:

The most widespread application remains smartphones, with the AI functionality penetration rate expected to exceed 50% after 2028. As the device most closely connected to consumers, smartphones will continue to serve as the mainstream platform for personalized AI.

2. PCs:

Changes in the PC market are also anticipated, as PCs are equipped with more powerful hardware than smartphones, enabling them to process complex AI models. NVIDIA, for instance, has unveiled the DGX Spark and DGX Station, personal AI supercomputers developed under Project DIGITS, with DGX Spark scheduled for release after the pre-order period in May 2025. Priced at around $3,000, DGX Spark is expected to extend AI beyond cloud services into desktop and edge applications. AI PCs as a category are projected to account for 53% of total PC shipments by 2027, marking 2025 as the true beginning of this transition.

3. Automobiles:

In the automotive sector, autonomous vehicles require on-board AI since network latency is unacceptable. Leading the market in this area are NVIDIA (with its DRIVE Orin), Tesla (using its proprietary FSD chip), and Horizon Robotics (with its Journey series), while BYD is expanding its market presence through the development of its own chips.

On-Device AI Is Poised to Drive Genuine Smartphone Replacement Demand

In the 2025 smartphone market, on-device AI is emerging as a core competitive element by emphasizing personalization, security, and low latency. This evolution in AI functionality is expected to spur increased smartphone replacement demand. Samsung’s Galaxy S25 series has enhanced its AI features in collaboration with Google, Apple is pursuing ecosystem integration through Apple Intelligence, and Huawei is expanding its self-reliant AI capabilities with HarmonyOS and its proprietary chips.

Samsung’s Galaxy S24 became the world’s first smartphone to integrate AI functions (including translation, summarization, and “Circle to Search”) and surpassed 30 million unit sales in its first year, marking the first such milestone since the Galaxy S10 in 2019. The Galaxy S25 is garnering acclaim in the market for its accelerated AI adoption. As 25% of S24 buyers cited AI as a key purchasing factor, similar consumer responses are anticipated for the S25.

Conversely, pre-sales of the iPhone 16 were 13% lower than those of its predecessor. Market feedback, citing delays in Apple Intelligence (the AI Siri upgrade) and insufficient multilingual support, suggested that Apple’s AI innovation was falling behind. This prompted a major reshuffle of the leadership overseeing AI research and development, reinforcing the notion that on-device AI is indeed driving tangible replacement demand.

In 2025, handset manufacturers are expanding AI services across both premium and mid-to-low range models. Historically, smartphone replacement demand was driven by form factor changes or significant technological breakthroughs; now, AI advancements are expected to lift both overall smartphone sales and AI penetration.

NVIDIA Enters the AI Personal Computer Market

NVIDIA plans to broaden the AI landscape from cloud services to desktop and edge applications through its personal AI computer line, DGX. This move is designed to leverage cost advantages and improve accessibility, thereby enabling AI developers, researchers, and students to access advanced AI tools. NVIDIA’s entry into the personal AI computer market is seen as an ambitious bid to drive the mass adoption of AI and to solidify its dominant position in the AI chip market.

At GTC 2025, NVIDIA announced the product names of its AI supercomputers, DGX Spark and DGX Station, which originate from Project DIGITS showcased at CES this year. The DGX Spark is priced at approximately $3,000, while the DGX Station’s price has not been officially disclosed but is estimated by some to be around $100,000, making these systems attractively priced for individual developers and small to medium-sized enterprises.

• DGX Spark

DGX Spark is built on the GB10 Blackwell Superchip, which supports 5th-generation Tensor Cores and FP4 operations and can perform up to 1,000 trillion operations per second. It comes equipped with 128GB of unified memory and up to 4TB of NVMe SSD storage. Furthermore, the GB10 Superchip employs NVIDIA NVLink™-C2C interconnect technology to enhance CPU and GPU memory performance. Fully integrated with NVIDIA’s AI software stack, it allows users to seamlessly migrate models to DGX Cloud or other cloud and data center infrastructures with minimal code modifications.

• DGX Station

The DGX Station is a compact AI server that delivers data center-level AI computing performance in a desktop form factor, making it well-suited for enterprise applications (such as in hospitals and corporate environments). It is the first desktop system to feature the GB300 Blackwell Ultra Superchip and provides 784GB of high-capacity memory to accelerate AI model training and inference workloads. Additionally, it incorporates NVIDIA’s ConnectX-8 SuperNIC networking technology, which supports speeds of up to 800 Gb/s.
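The memory capacities and FP4 support described for these systems matter because the size of a model's weights scales with both parameter count and numeric precision. The sketch below is a back-of-the-envelope estimate under simplifying assumptions: it counts weights only (no KV cache, activations, or runtime overhead), takes 1 GB as 10^9 bytes, and uses a 70-billion-parameter model purely as an example.

```python
def weight_footprint_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def fits(num_params: float, bits_per_param: int, memory_gb: float) -> bool:
    """True if the weights alone fit in the given memory (ignores overhead)."""
    return weight_footprint_gb(num_params, bits_per_param) <= memory_gb


SPARK_MEMORY_GB = 128    # unified memory quoted for DGX Spark
STATION_MEMORY_GB = 784  # memory quoted for DGX Station

params = 70e9  # example model size (assumption for illustration)
for bits in (16, 8, 4):
    size = weight_footprint_gb(params, bits)
    print(f"{bits}-bit: {size:.0f} GB, fits DGX Spark: {fits(params, bits, SPARK_MEMORY_GB)}")
```

In this simplified view, a 70-billion-parameter model at 16-bit precision exceeds DGX Spark's 128GB, while the same model at 4-bit precision needs roughly a quarter of that footprint, which is why low-precision formats are central to running large models on desktop-class hardware.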

These advancements indicate that both on-device AI in smartphones and NVIDIA’s push into personal AI computers are set to play pivotal roles in the evolving AI ecosystem, driving tangible consumer demand and enabling broader, cost-effective access to advanced AI technologies.

2-2. Democratization of AI, Key Technologies: ① ASIC ② SOCAMM ③ LLW/LPW

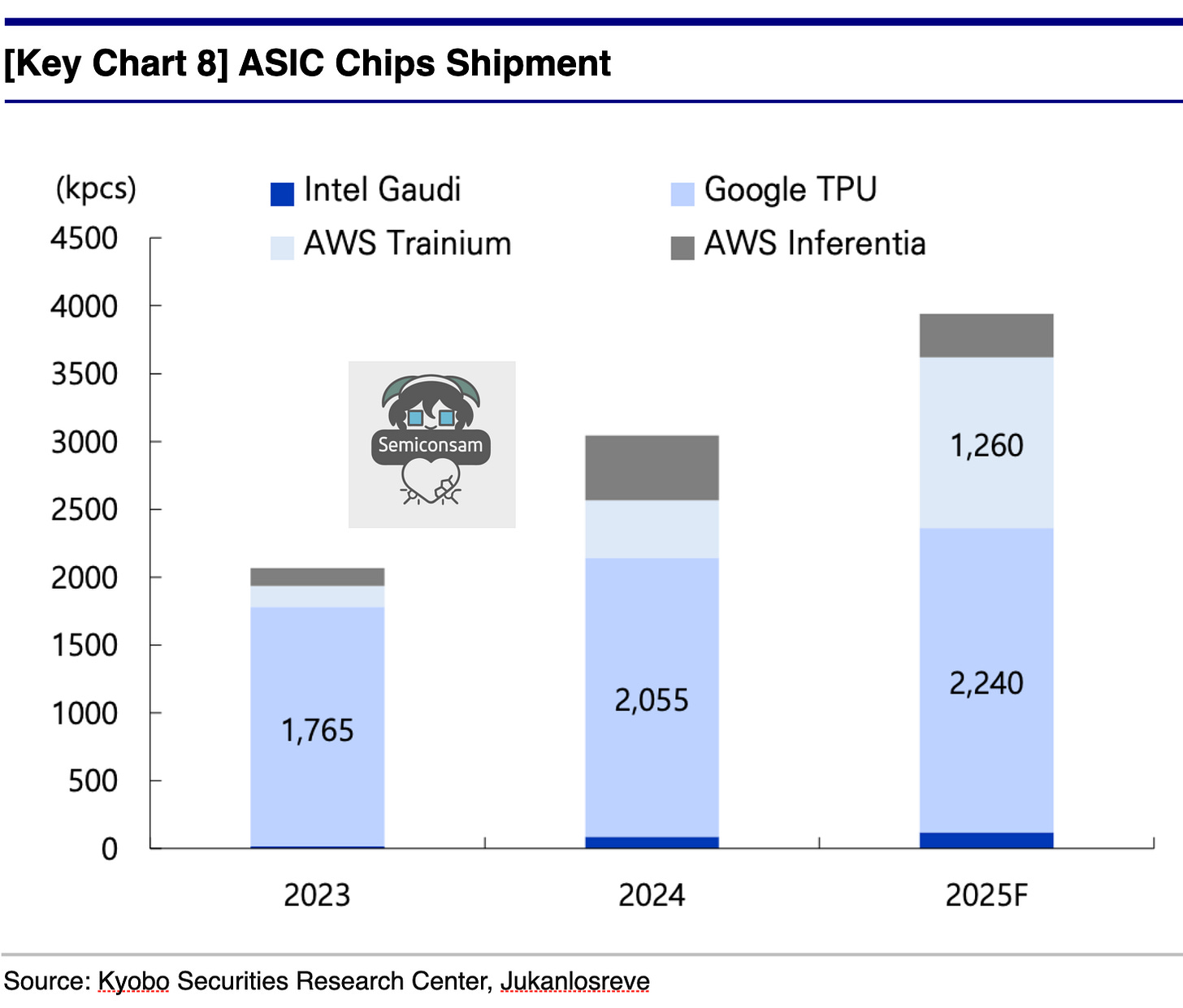

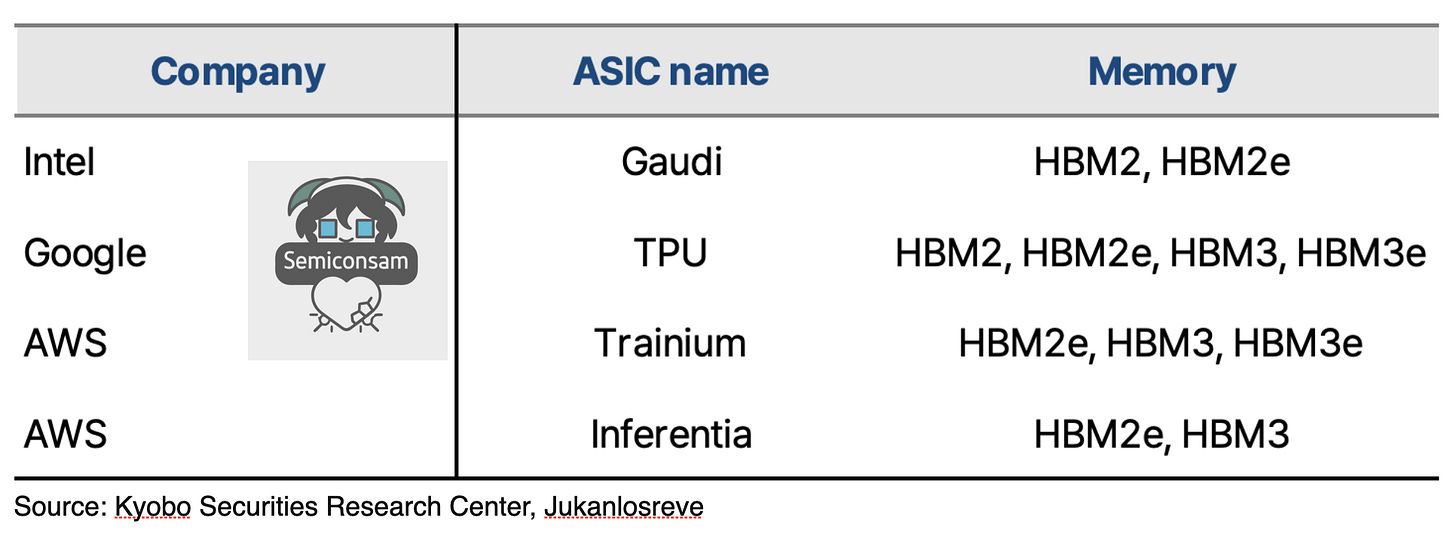

① ASIC: A New Major Consumer of HBM in Semiconductors

Domestic memory manufacturers are already detecting strong demand in the ASIC market, in addition to the GPU-dominated segment, and are supplying HBM products specifically for ASIC semiconductors. Global big tech companies, confronted with NVIDIA’s overwhelming market share of over 90% due to its superior technology and products, are developing accelerators that incorporate custom ASICs—designed to include only the essential functionalities—to break free from this dominance.

The ASIC semiconductor market is projected to grow from $23 billion to approximately $35 billion. ASICs are advantageous in that they deliver optimal performance for limited functionalities through streamlined circuit design and low power consumption, all while maintaining low production costs. Major global companies such as Google and Amazon are actively working to reduce their reliance on NVIDIA, mitigating the high costs and power challenges associated with GPUs, which bodes well for future ASIC market growth. Moreover, ASIC semiconductors can also experience concurrent growth in the on-device AI domain, as they are custom-built to prioritize rapid input response and device lightweighting.

With AI models transitioning to focus on inference and ASIC manufacturing entering its foundational phase, it is anticipated that by 2025 there will be more than 100% year-over-year growth in HBM demand, accompanied by a shift from predominantly low-spec HBM usage to the increased incorporation of high-performance products.

② DRAM for AI PCs: SOCAMM, the Anticipated Second HBM

SOCAMM (Small Outline Compression Attached Memory Module), a next-generation memory module proposed by NVIDIA, is a cutting-edge technology aimed at the high-performance computing and AI markets. With the anticipated expansion of the edge computing market, SOCAMM is expected to emerge as the second HBM, driving new growth.

Global memory manufacturers are currently running internal tests on various types of DRAM modules for AI PCs, including SOCAMM, in response to customer demand. Notably, NVIDIA’s proposed SOCAMM combines the features of SO-DIMM (Small Outline DIMM) and CAMM (Compression Attached Memory Module). It is expected to deliver speeds comparable to on-board RAM in PC and laptop environments while remaining detachable and suitable for mass production.

At GTC 2025, NVIDIA unveiled DGX Spark and DGX Station, outlining its vision for expanding the personal AI PC market. Building on this vision, Micron, in collaboration with NVIDIA, introduced an LPDDR5X-based SOCAMM featuring:

1. A compact size (14 × 90 mm, approximately one-third the size of an RDIMM),

2. High bandwidth (utilizing a 16-layer vertical stacking technology in LPDDR5X to boost bandwidth by more than 2.5 times, up to 8,533 MT/s),

3. High memory capacity (up to 128GB), and

4. Low power consumption (approximately one-quarter of typical levels, achieved using PKG technology).
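To put the 8,533 MT/s figure in context, peak theoretical bandwidth is the transfer rate multiplied by the bus width. The report does not give SOCAMM’s bus width, so the 128-bit value below is an assumption made purely for illustration, and `peak_bandwidth_gb_s` is a hypothetical helper.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int) -> float:
    """Peak theoretical bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mt_s * 1e6 * bytes_per_transfer / 1e9


# ASSUMPTION: 128-bit module bus width; this value is not stated in the report.
ASSUMED_BUS_WIDTH_BITS = 128

socamm_bw = peak_bandwidth_gb_s(8533, ASSUMED_BUS_WIDTH_BITS)
print(f"Peak bandwidth at 8,533 MT/s: {socamm_bw:.1f} GB/s")
```

This is an upper bound under the stated assumption; sustained bandwidth in a real system would be lower.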

These advancements, driven by NVIDIA’s blueprint, have particularly heightened expectations for advanced substrate design, resulting in rising stock prices for packaging and substrate companies.

③ Next-Generation Mobile DRAM for On-Device AI: LPW/LLW

To implement on-device AI, fast and efficient memory is essential. Consequently, the development and mass production of LPW/LLW—DRAMs optimized for low power consumption—are planned, and growth in the related industry is anticipated.

LPW and LLW are mobile DRAMs manufactured in customized forms to meet specific customer requirements. As related standards are being established, the nomenclature varies among manufacturers. Samsung is currently developing LPW (Low Power Wide I/O) DRAM and has announced plans for full-scale production beginning in 2028. This DRAM achieves a 166% improvement in input/output speed and a 54% reduction in power consumption compared to the latest mobile memory, LPDDR5X.
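The two LPW figures can be combined into a rough performance-per-watt ratio. This is a sketch under two assumptions the report does not confirm: that both percentages are measured against the same LPDDR5X baseline, and that the 54% reduction refers to total power at the improved speed. The function name is illustrative.

```python
def relative_perf_per_watt(speed_gain_pct: float, power_cut_pct: float) -> float:
    """Speed-per-watt relative to the baseline (baseline = 1.0)."""
    speed_ratio = 1 + speed_gain_pct / 100   # +166% -> 2.66x the baseline speed
    power_ratio = 1 - power_cut_pct / 100    # -54%  -> 0.46x the baseline power
    return speed_ratio / power_ratio


# ASSUMPTION: both figures share one baseline and apply simultaneously.
lpw_vs_lpddr5x = relative_perf_per_watt(166, 54)
print(f"LPW speed per watt vs. LPDDR5X: about {lpw_vs_lpddr5x:.1f}x")
```

If the power figure is instead quoted per bit transferred, the combined ratio would differ, so the result should be read as an order-of-magnitude indication only.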

SK Hynix, on the other hand, has implemented LLW (Low Latency Wide I/O) DRAM in Apple’s XR device, Vision Pro, as of June 2024. The next-generation mobile DRAM is designed by stacking multiple LPDDR DRAM modules in a stepped configuration and connecting them to a substrate. This approach significantly increases the number of I/O channels, reduces the speed of individual channels to lower power consumption, and enhances overall performance.

Samsung refers to this technology as Vertical Wire Bonding (VWB) packaging technology, while SK Hynix terms it Vertical Fan-Out (VFO) technology. The key difference lies in the formation of copper pillars during the packaging process: Samsung fills with epoxy first and then forms the copper pillars, whereas SK Hynix connects the copper pillars first before filling with epoxy. In the future, these products are expected to be supplied in the form of an Application Processor (AP) and System-in-Package (SiP).

④ The Intensifying Role of NPUs

The technical focus of on-device AI is shifting from the initial training phase to the inference phase. While training large-scale models—requiring substantial computing power and memory bandwidth—has traditionally taken place in the cloud on GPUs, the role of NPUs is becoming increasingly crucial as these trained models are executed in real time on devices. NPUs are evolving in terms of computing efficiency (low-precision operations), low-power design (process miniaturization), real-time processing (cache optimization), support for lightweight models, and SoC integration. Going forward, NPUs will likely advance in tandem with on-device AI toward ultra-low power consumption (2nm, INT4), high performance (100+ TOPS, 3D chips), on-chip learning (SNN), multimodal processing, software integration, and enhanced security. This progression is expected to provide faster and more secure AI experiences in smartphones (successors to the Galaxy S25), autonomous driving (alternatives to Tesla FSD), and IoT, thereby solidifying NPUs as a core on-device AI technology.
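One reason low-precision formats such as INT4 matter on devices is that the memory needed for model weights scales with bits per weight. The sketch below estimates weight storage only (it ignores activations, caches, and quantization metadata), and the 7-billion-parameter model size is a hypothetical example, not a figure from the report.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights in GB (decimal gigabytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# ASSUMPTION: a hypothetical 7-billion-parameter model, for illustration only.
HYPOTHETICAL_PARAMS = 7e9

footprints = {
    name: weight_footprint_gb(HYPOTHETICAL_PARAMS, bits)
    for name, bits in (("FP16", 16), ("INT8", 8), ("INT4", 4))
}
for name, gigabytes in footprints.items():
    print(f"{name}: {gigabytes:.1f} GB")
```

Under these assumptions, moving from FP16 to INT4 cuts weight storage to a quarter, which is what brings such a model within reach of a smartphone’s DRAM.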

In parallel, innovative hardware architectures such as neuromorphic chips (inspired by the human brain) and optical computing are under active research. The unique design approach of neuromorphic chips aligns with ultra-low power consumption, real-time processing, and efficient inference. Neuromorphic chips—semiconductors modeled after the neural network structure of the human brain (neurons and synapses)—differ from conventional von Neumann architectures (CPU, GPU, NPU) by employing in-memory computing, in which data processing and memory are not separated. IBM (TrueNorth, introduced in 2014) and Intel (Loihi 2, introduced in 2021) have released prototypes of neuromorphic chips, and various startups are developing analog-based AI accelerators. Although commercialization and mass production remain constrained by limited software ecosystems and high investment costs, once these technologies become viable, they are expected to enable significantly more powerful on-device AI with considerably lower energy consumption.
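The neuron-and-synapse model behind such chips can be illustrated with a leaky integrate-and-fire neuron, a common textbook abstraction for spiking neural networks. This is a conceptual sketch only and does not represent how TrueNorth or Loihi 2 are programmed; the function name and all parameter values are illustrative assumptions.

```python
def simulate_lif(input_currents, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron; return a 0/1 spike train."""
    potential = reset
    spikes = []
    for current in input_currents:
        # The membrane potential decays (leaks) and then integrates new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)      # fire a spike
            potential = reset     # and reset the membrane potential
        else:
            spikes.append(0)
    return spikes


spikes = simulate_lif([0.5, 0.5, 0.5, 0.0, 0.0, 1.2])
print(spikes)
```

The neuron emits output only when its accumulated input crosses the threshold, so downstream work is triggered by sparse events rather than on every cycle, which is the intuition behind the low-power claims for this class of hardware.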

2-3. The AI Agent Era: Sustained Growth in Semiconductor Demand

Continued Expansion of AI Servers

Driven by strong demand from North American CSPs and China, overall demand for AI infrastructure remains robust. AI servers are expected to grow by about 28% in 2025 and to account for approximately 15% of all servers.

• Amazon (YoY demand forecast +10%) plans to increase server deployments and expand U.S.-based production lines.

• Microsoft (YoY demand forecast +6%) is allocating the majority of its budget to AI servers and accelerating the replacement of legacy platforms to promote multicore cloud computing architectures.

• DeepSeek is driving increased AI server demand in China, with BBAT (ByteDance, Baidu, Alibaba, Tencent) reportedly having recently raised orders for NVIDIA’s H20 from 500,000 units to between 700,000 and 800,000 units.

By Product Category:

• DDR5:

Companies such as ByteDance, Alibaba, and Tencent are increasingly focusing on AI servers, driving up utilization rates at server ODMs (IEIT, Foxconn, Inventec) and significantly boosting DDR5 procurement. DDR5 penetration is expected to reach up to 95% by year-end.

• GDDR:

DeepSeek is lifting demand, particularly for graphics cards such as the 4090Ti and RX 7650 GRE, which in turn increases demand for GDDR6. In addition, the launch of the RTX 50 series GPUs is boosting production of GDDR7 products.

• Client SSD:

Inventory reductions by customers are creating replenishment demand. The rising popularity of all-in-one systems (AIOs) driven by DeepSeek, along with the increasing penetration and promotion of edge AI applications, is stimulating demand for high-end client SSDs.

• Enterprise SSD:

Significant reductions in AI model training costs are prompting Chinese network companies to accelerate the expansion of their AI infrastructure.

Increase in Semiconductor Demand Driven by Edge AI Computing Trends

Edge AI (including on-device AI) devices must process vast amounts of data quickly, making high-speed, high-capacity DRAM indispensable. For AI-enabled smartphones, DRAM bandwidth and capacity are becoming increasingly critical for storing model parameters and intermediate computational data. In line with this trend, demand for DRAM and NAND flash is projected to rise by an additional 41% and 10%, respectively, compared to current levels. For example, the Galaxy S25 Ultra now uses 16GB of LPDDR5X, up from 12GB in the S24 Ultra. Mobile DRAM prices have been increasing due to China’s “Old to New” policy and the growing adoption of AI applications and AI servers. With LPDDR4X production being cut and LPDDR5X supply limited, customers are aggressively building inventory, leading to a steep price surge.

Semiconductor Prices Expected to Strengthen in 2Q25

As customers continue to aggressively secure inventory, memory prices are expected to trend upward in 2Q25, effectively ending the price decline observed in 1Q25. In the DRAM market, strong spot demand for DDR5 products has driven prices higher, and even DDR3 and DDR4 products have risen in parallel, confirming robust inventory buildup among customers. Although both buyers and sellers held high inventory levels in 1Q25, leading to a significant drop in ASP, early shipments of smartphones, servers, and laptops continued amid concerns over tariff policies, and demand for general-purpose servers was stronger than expected in the first half of 2025. Meanwhile, supply is tightening: production capacity for legacy products is decreasing as Micron increases its HBM supply, and CXMT’s production capacity has also been reduced as a result of US equipment supply restrictions.

For NAND flash, suppliers are implementing a price-priority strategy to limit losses and are tightly controlling shipments through continuous production cut policies, resulting in a decrease in transaction volumes.
